Health Monitoring

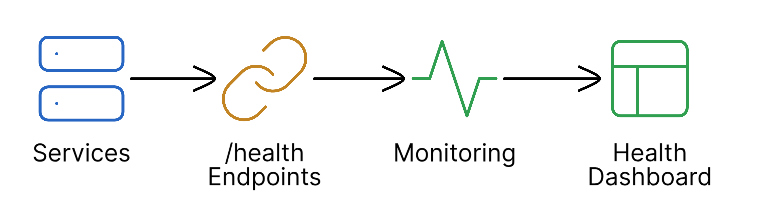

This guide covers Anava's health check endpoints, integration with monitoring systems, and alert configuration for maintaining operational visibility.

Overview

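Anava exposes two standardized health endpoints: a liveness check (/health) and a readiness check (/ready). Both return a JSON body together with an HTTP status code that monitoring tools can act on directly (200 when healthy, 503 when not). They therefore work with any platform that can probe an HTTPS URL, including Google Cloud Monitoring, Datadog, and Prometheus.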

Health Endpoint Types

| Endpoint | Purpose | When to Use |
|---|---|---|
| /health | Liveness check | Verify the service is running |
| /ready | Readiness check | Verify the service can handle requests |

Health Endpoint (/health)

The /health endpoint provides a lightweight liveness check. It verifies the service process is running and can respond to requests.

Request

```bash
curl -X GET https://api.anava.ai/health
```

Response Format

Healthy Response (200 OK):

```json
{
  "status": "healthy",
  "timestamp": "2024-01-15T10:30:00Z",
  "version": "2.4.1",
  "uptime": 86400
}
```

Unhealthy Response (503 Service Unavailable):

```json
{
  "status": "unhealthy",
  "timestamp": "2024-01-15T10:30:00Z",
  "version": "2.4.1",
  "error": "Service initialization failed"
}
```

Response Fields

| Field | Type | Description |
|---|---|---|
| status | string | healthy or unhealthy |
| timestamp | string (ISO 8601) | Current server time |
| version | string | Service version |
| uptime | integer | Seconds since service start |
| error | string | Error message (only when unhealthy) |
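As a minimal sketch (Python, standard library only), a client could parse the /health body into a typed record like the one below. HealthStatus and parse_health are hypothetical names used for illustration; uptime and error are treated as optional because the healthy and unhealthy examples above each omit one of them.

```python
import json
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class HealthStatus:
    status: str                   # "healthy" or "unhealthy"
    timestamp: datetime           # server time, timezone-aware (UTC)
    version: str
    uptime: Optional[int] = None  # seconds since start; the unhealthy example omits it
    error: Optional[str] = None   # only present when unhealthy


def parse_health(body: str) -> HealthStatus:
    """Parse a /health response body (hypothetical helper)."""
    data = json.loads(body)
    if data["status"] not in ("healthy", "unhealthy"):
        raise ValueError(f"unexpected status: {data['status']!r}")
    # datetime.fromisoformat() accepts a trailing "Z" only from Python 3.11,
    # so replace it with an explicit UTC offset first.
    timestamp = datetime.fromisoformat(data["timestamp"].replace("Z", "+00:00"))
    return HealthStatus(
        status=data["status"],
        timestamp=timestamp,
        version=data["version"],
        uptime=data.get("uptime"),
        error=data.get("error"),
    )
```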

Status Codes

| Code | Meaning | Action |
|---|---|---|
| 200 | Service healthy | No action needed |
| 503 | Service unhealthy | Investigate immediately |

Readiness Endpoint (/ready)

The /ready endpoint checks each critical dependency listed under Dependency Checks. It returns 200 only when all of them are healthy. If any check fails, it returns 503 and reports the failing dependency, with its error, in the checks object.

Request

```bash
curl -X GET https://api.anava.ai/ready
```

Response Format

Ready Response (200 OK):

```json
{
  "status": "ready",
  "timestamp": "2024-01-15T10:30:00Z",
  "version": "2.4.1",
  "checks": {
    "database": {
      "status": "healthy",
      "latency_ms": 12
    },
    "mqtt": {
      "status": "healthy",
      "latency_ms": 8
    },
    "storage": {
      "status": "healthy",
      "latency_ms": 25
    },
    "auth": {
      "status": "healthy",
      "latency_ms": 15
    }
  }
}
```

Not Ready Response (503 Service Unavailable):

```json
{
  "status": "not_ready",
  "timestamp": "2024-01-15T10:30:00Z",
  "version": "2.4.1",
  "checks": {
    "database": {
      "status": "healthy",
      "latency_ms": 12
    },
    "mqtt": {
      "status": "unhealthy",
      "error": "Connection refused",
      "latency_ms": null
    },
    "storage": {
      "status": "healthy",
      "latency_ms": 25
    },
    "auth": {
      "status": "healthy",
      "latency_ms": 15
    }
  }
}
```

Dependency Checks

| Check | What It Validates |
|---|---|
| database | Firestore connection and read access |
| mqtt | MQTT broker connectivity |
| storage | Cloud Storage bucket access |
| auth | Firebase Auth service availability |

Status Codes

| Code | Meaning | Action |
|---|---|---|
| 200 | All dependencies healthy | No action needed |
| 503 | One or more dependencies unhealthy | Check failed dependencies |
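To see which dependency caused a 503, a client only needs the checks object. Below is a Python sketch that assumes the response shape shown in the examples above. failed_dependencies is a hypothetical helper. It performs the same selection as the jq command under Troubleshooting.

```python
from __future__ import annotations

import json


def failed_dependencies(body: str) -> dict[str, str]:
    """Return {dependency: error} for every check whose status is not "healthy".

    Equivalent selection in jq:
    .checks | to_entries[] | select(.value.status != "healthy")
    """
    checks = json.loads(body).get("checks", {})
    return {
        name: check.get("error", "no error message")
        for name, check in checks.items()
        if check.get("status") != "healthy"
    }
```

Applied to the Not Ready example, this returns `{"mqtt": "Connection refused"}`.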

Latency Thresholds

| Check | Warning | Critical |
|---|---|---|
| Database | > 100ms | > 500ms |
| MQTT | > 50ms | > 200ms |
| Storage | > 200ms | > 1000ms |
| Auth | > 100ms | > 500ms |
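These thresholds can be applied to the latency_ms values in a /ready response. The sketch below is hedged in two ways. The threshold values come from the table above, but classify_latency is a hypothetical helper. Treating a null latency (an unreachable dependency, as in the Not Ready example) as critical is also an assumption, not documented behaviour.

```python
from __future__ import annotations

# (warning, critical) thresholds in milliseconds, from the table above.
# Keys match the check names used in the /ready JSON body.
THRESHOLDS_MS = {
    "database": (100, 500),
    "mqtt": (50, 200),
    "storage": (200, 1000),
    "auth": (100, 500),
}


def classify_latency(check: str, latency_ms: float | None) -> str:
    """Return "ok", "warning", or "critical" for one dependency check."""
    if latency_ms is None:
        # Assumption: no latency means the dependency was unreachable.
        return "critical"
    warning, critical = THRESHOLDS_MS[check]
    if latency_ms > critical:
        return "critical"
    if latency_ms > warning:
        return "warning"
    return "ok"
```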

Monitoring Integration

Google Cloud Monitoring

Create an uptime check in Cloud Monitoring:

Console Setup:

- Go to Monitoring > Uptime checks
- Click Create Uptime Check
- Configure:
  - Protocol: HTTPS
  - Resource Type: URL
  - Hostname: api.anava.ai
  - Path: /health
  - Check frequency: 1 minute
- Configure alerting policy

Terraform Configuration:

```hcl
resource "google_monitoring_uptime_check_config" "health" {
  display_name = "Anava Health Check"
  timeout      = "10s"
  period       = "60s"

  http_check {
    path         = "/health"
    port         = 443
    use_ssl      = true
    validate_ssl = true

    accepted_response_status_codes {
      status_class = "STATUS_CLASS_2XX"
    }
  }

  monitored_resource {
    type = "uptime_url"
    labels = {
      project_id = var.project_id
      host       = "api.anava.ai"
    }
  }
}

resource "google_monitoring_uptime_check_config" "readiness" {
  display_name = "Anava Readiness Check"
  timeout      = "30s"
  period       = "300s"

  http_check {
    path         = "/ready"
    port         = 443
    use_ssl      = true
    validate_ssl = true

    accepted_response_status_codes {
      status_class = "STATUS_CLASS_2XX"
    }
  }

  monitored_resource {
    type = "uptime_url"
    labels = {
      project_id = var.project_id
      host       = "api.anava.ai"
    }
  }
}
```

Datadog

Configure Datadog HTTP checks:

conf.d/http_check.d/conf.yaml (the Agent's HTTP check configuration, not the main datadog.yaml):

```yaml
init_config:

instances:
  - name: Anava Health
    url: https://api.anava.ai/health
    method: GET
    timeout: 10
    http_response_status_code: 200
    collect_response_time: true
    tags:
      - service:anava
      - env:production
      - check_type:liveness

  - name: Anava Readiness
    url: https://api.anava.ai/ready
    method: GET
    timeout: 30
    http_response_status_code: 200
    collect_response_time: true
    tags:
      - service:anava
      - env:production
      - check_type:readiness
```

Dashboard Query:

```
avg:network.http.can_connect{service:anava,check_type:liveness} by {env}
```

Prometheus

Configure Prometheus blackbox exporter:

blackbox.yml:

```yaml
modules:
  http_health:
    prober: http
    timeout: 10s
    http:
      valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
      valid_status_codes: [200]
      method: GET
      preferred_ip_protocol: "ip4"

  http_ready:
    prober: http
    timeout: 30s
    http:
      valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
      valid_status_codes: [200]
      method: GET
      preferred_ip_protocol: "ip4"
```

prometheus.yml:

```yaml
scrape_configs:
  - job_name: 'anava-health'
    metrics_path: /probe
    params:
      module: [http_health]
    static_configs:
      - targets:
          - https://api.anava.ai/health
    relabel_configs:
      - source_labels: [__address__]
        target_label: __param_target
      - source_labels: [__param_target]
        target_label: instance
      - target_label: __address__
        replacement: blackbox-exporter:9115

  - job_name: 'anava-readiness'
    metrics_path: /probe
    # The default scrape_timeout is 10s, and the blackbox exporter caps the
    # probe at the scrape timeout; raise it so the 30s http_ready timeout applies.
    scrape_timeout: 30s
    params:
      module: [http_ready]
    static_configs:
      - targets:
          - https://api.anava.ai/ready
    relabel_configs:
      - source_labels: [__address__]
        target_label: __param_target
      - source_labels: [__param_target]
        target_label: instance
      - target_label: __address__
        replacement: blackbox-exporter:9115
```
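The relabeling above is the usual blackbox exporter multi-target pattern. Prometheus scrapes the exporter, not Anava, and passes the Anava URL to it as a query parameter. The small Python sketch below replays the three relabel steps for one target to show the resulting request. blackbox_scrape is a hypothetical helper, and the query parameter order Prometheus actually sends may differ.

```python
from urllib.parse import urlencode


def blackbox_scrape(target: str, module: str,
                    exporter: str = "blackbox-exporter:9115") -> dict:
    """Mimic the relabel_configs for one static target."""
    labels = {"__address__": target}
    # 1. Copy the Anava URL into __param_target (becomes ?target=...).
    labels["__param_target"] = labels["__address__"]
    # 2. Keep the Anava URL as the visible instance label.
    labels["instance"] = labels["__param_target"]
    # 3. Point the actual scrape at the exporter instead.
    labels["__address__"] = exporter
    query = urlencode({"module": module, "target": labels["__param_target"]})
    return {
        "instance": labels["instance"],
        "scrape_url": f"http://{labels['__address__']}/probe?{query}",
    }
```

For the health job this produces `http://blackbox-exporter:9115/probe?module=http_health&target=https%3A%2F%2Fapi.anava.ai%2Fhealth`, while the series stay labelled `instance="https://api.anava.ai/health"`.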

Prometheus Alert Rules:

```yaml
groups:
  - name: anava-health
    rules:
      - alert: AnavaServiceDown
        expr: probe_success{job="anava-health"} == 0
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: "Anava service is down"
          description: "Health check has been failing for more than 2 minutes"

      - alert: AnavaServiceNotReady
        expr: probe_success{job="anava-readiness"} == 0
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Anava service is not ready"
          description: "Readiness check has been failing for more than 5 minutes"

      - alert: AnavaHighLatency
        expr: probe_duration_seconds{job="anava-health"} > 1
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Anava health check latency is high"
          description: "Health check latency exceeds 1 second"
```
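The for clause means an alert is pending while its expression holds. It fires only once the expression has held continuously for the full duration, and a single passing evaluation resets it. The simplified Python sketch below applies that logic to the probe_success rules. It assumes one sample per rule evaluation. alert_state is a hypothetical helper, and details such as notification resend intervals are ignored.

```python
from __future__ import annotations


def alert_state(samples: list[tuple[float, int]], for_seconds: float) -> str:
    """Approximate a rule like `probe_success == 0` with `for: <duration>`.

    samples: (evaluation_time_seconds, probe_success) pairs in time order.
    Returns "inactive", "pending", or "firing".
    """
    if not samples or samples[-1][1] != 0:
        return "inactive"  # latest evaluation passed, so nothing is active
    # Walk back to the first evaluation of the current unbroken failure run.
    active_at = samples[-1][0]
    for ts, success in reversed(samples):
        if success != 0:
            break
        active_at = ts
    held = samples[-1][0] - active_at
    return "firing" if held >= for_seconds else "pending"
```

With one-minute probes and `for: 2m`, the alert first fires on the third consecutive failed evaluation, two minutes after the first failure.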

Alert Configuration

Alert Severity Levels

| Severity | Condition | Response Time | Notification |
|---|---|---|---|
| Critical | Service down > 2 min | Immediate | PagerDuty, SMS |
| Warning | Degraded > 5 min | 15 minutes | Email, Slack |
| Info | Latency spike | Next business day | |

Alert Policies

Cloud Monitoring Alert Policy (Terraform):

```hcl
resource "google_monitoring_alert_policy" "health_alert" {
  display_name = "Anava Health Alert"
  combiner     = "OR"

  conditions {
    display_name = "Health Check Failure"

    condition_threshold {
      filter          = "metric.type=\"monitoring.googleapis.com/uptime_check/check_passed\" AND resource.type=\"uptime_url\""
      comparison      = "COMPARISON_LT"
      threshold_value = 1
      duration        = "120s"

      aggregations {
        alignment_period   = "60s"
        per_series_aligner = "ALIGN_NEXT_OLDER"
      }
    }
  }

  notification_channels = [
    google_monitoring_notification_channel.email.name,
    google_monitoring_notification_channel.pagerduty.name,
  ]

  alert_strategy {
    auto_close = "1800s"
  }
}
```

Notification Channels

Configure multiple notification channels for redundancy:

| Channel | Use Case | Configuration |
|---|---|---|
| Email | All alerts | Team distribution list |
| Slack | Warning+ | #ops-alerts channel |
| PagerDuty | Critical | On-call rotation |
| SMS | Critical | On-call phone |
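Routing by severity can be expressed as a minimum severity per channel. This minimal sketch makes two assumptions. First, the "All alerts" row is the email channel (the Terraform alert policy references an email notification channel). Second, "Warning+" means warning and critical. Because it follows the Use Case column, critical alerts also reach email and Slack, even though the severity table lists only PagerDuty and SMS for critical. route and CHANNEL_MIN_SEVERITY are hypothetical names.

```python
from __future__ import annotations

# Severity ranks; a higher number is more severe.
SEVERITY_RANK = {"info": 0, "warning": 1, "critical": 2}

# Lowest severity each channel receives, read from the Use Case column.
CHANNEL_MIN_SEVERITY = {
    "email": "info",         # All alerts (assumed to be the email row)
    "slack": "warning",      # Warning+
    "pagerduty": "critical",
    "sms": "critical",
}


def route(severity: str) -> list[str]:
    """Return the channels, sorted by name, that receive this severity."""
    rank = SEVERITY_RANK[severity.lower()]
    return sorted(
        channel
        for channel, minimum in CHANNEL_MIN_SEVERITY.items()
        if rank >= SEVERITY_RANK[minimum]
    )
```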

Example Slack Webhook Integration:

```bash
# Test notification
curl -X POST https://hooks.slack.com/services/YOUR/WEBHOOK/URL \
  -H 'Content-Type: application/json' \
  -d '{
    "text": "Anava Health Alert",
    "attachments": [{
      "color": "danger",
      "fields": [{
        "title": "Status",
        "value": "Service Unavailable",
        "short": true
      }, {
        "title": "Environment",
        "value": "Production",
        "short": true
      }]
    }]
  }'
```
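The same test notification can be sent from Python with the standard library. The sketch below builds the identical payload and POSTs it. The webhook URL is still the placeholder from the curl example, and build_alert_payload and send_to_slack are hypothetical helpers.

```python
import json
import urllib.request

# Placeholder, as in the curl example; substitute a real incoming-webhook URL.
WEBHOOK_URL = "https://hooks.slack.com/services/YOUR/WEBHOOK/URL"


def build_alert_payload(status: str, environment: str) -> dict:
    """Build the same message body as the curl test notification."""
    return {
        "text": "Anava Health Alert",
        "attachments": [{
            "color": "danger",
            "fields": [
                {"title": "Status", "value": status, "short": True},
                {"title": "Environment", "value": environment, "short": True},
            ],
        }],
    }


def send_to_slack(payload: dict, url: str = WEBHOOK_URL, timeout: float = 10.0) -> int:
    """POST the payload as JSON and return the HTTP status code."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Once WEBHOOK_URL points at a real webhook, `send_to_slack(build_alert_payload("Service Unavailable", "Production"))` reproduces the curl command.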

Best Practices

Health Check Frequency

| Environment | /health Interval | /ready Interval |
|---|---|---|
| Production | 30-60 seconds | 1-5 minutes |
| Staging | 1-2 minutes | 5-10 minutes |
| Development | 5 minutes | 15 minutes |

Timeout Configuration

| Endpoint | Recommended Timeout | Max Timeout |
|---|---|---|
| /health | 5 seconds | 10 seconds |
| /ready | 15 seconds | 30 seconds |
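These limits can be checked mechanically before a probe is deployed. In the hedged Python sketch below, the recommended and maximum values come from the table above. check_probe_config is a hypothetical helper, and the rule that a timeout should be shorter than the check interval is an added assumption.

```python
from __future__ import annotations

# (recommended, maximum) timeouts in seconds, from the table above.
TIMEOUTS = {"/health": (5, 10), "/ready": (15, 30)}


def check_probe_config(endpoint: str, timeout_s: float, interval_s: float) -> list[str]:
    """Return warnings for one probe's timeout and interval settings."""
    recommended, maximum = TIMEOUTS[endpoint]
    warnings = []
    if timeout_s > maximum:
        warnings.append(f"{endpoint}: timeout {timeout_s}s exceeds max {maximum}s")
    elif timeout_s > recommended:
        warnings.append(f"{endpoint}: timeout {timeout_s}s above recommended {recommended}s")
    # Assumption: a probe should finish before the next one is due.
    if timeout_s >= interval_s:
        warnings.append(f"{endpoint}: timeout {timeout_s}s is not shorter than interval {interval_s}s")
    return warnings
```

The Terraform uptime checks shown earlier use a 10s timeout for /health and 30s for /ready. Each of those settings produces one warning, because both timeouts are set to the maximum rather than the recommended value.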

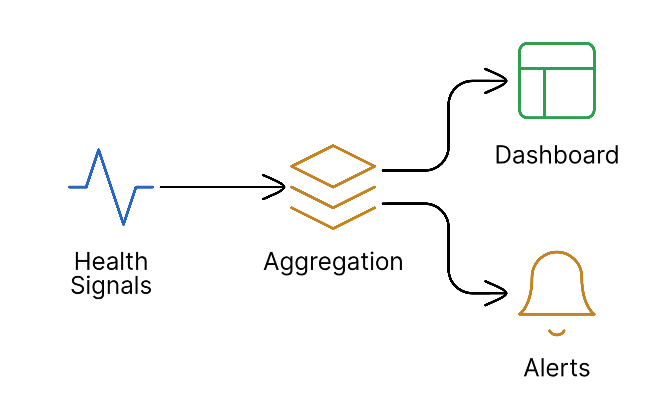

Monitoring Dashboard

Create a unified dashboard with these panels:

- Service Availability - Uptime percentage over time

- Response Latency - P50, P95, P99 latency

- Dependency Health - Individual dependency status

- Error Rate - Failed checks over time

- Alerts - Active and recent alerts
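The Service Availability and Response Latency panels reduce to two calculations over raw probe results. The Python sketch below assumes a hypothetical ProbeResult record for each check run. It computes uptime as the share of successful probes and computes percentiles with statistics.quantiles. Failed probes are included in the latency figures, which is a design choice you may want to change.

```python
from __future__ import annotations

import statistics
from dataclasses import dataclass


@dataclass
class ProbeResult:
    success: bool
    latency_ms: float


def summarize(results: list[ProbeResult]) -> dict:
    """Availability (%) and P50/P95/P99 latency over a window of probes."""
    if not results:
        raise ValueError("no probe results")
    uptime_pct = 100.0 * sum(r.success for r in results) / len(results)
    latencies = sorted(r.latency_ms for r in results)
    summary = {"uptime_pct": uptime_pct, "p50": None, "p95": None, "p99": None}
    if len(latencies) >= 2:
        # quantiles(n=100) returns the 99 cut points P1..P99.
        cuts = statistics.quantiles(latencies, n=100, method="inclusive")
        summary.update(p50=cuts[49], p95=cuts[94], p99=cuts[98])
    return summary
```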

Compliance Coverage

Health monitoring supports the following compliance requirements:

SOC 2 Type II

| Control | Requirement | How Health Monitoring Addresses |
|---|---|---|
| A1.2 | System availability monitoring | /health endpoint provides continuous availability verification with configurable alerting |
| A1.2 | Recovery procedures | Alert policies enable rapid incident response; documented escalation paths |
| CC7.2 | Monitoring for security events | Health checks detect service disruptions that may indicate security incidents |

ISO 27001:2022

| Control | Requirement | How Health Monitoring Addresses |
|---|---|---|
| A.8.6 | Capacity management | /ready endpoint monitors dependency health and latency thresholds |
| A.8.15 | Logging | Health check results logged for audit trail |
| A.5.29 | Information security during disruption | Automated monitoring ensures rapid detection of availability issues |

Audit Evidence

Health monitoring provides audit evidence through:

- Uptime Reports - Historical availability metrics

- Alert History - Record of incidents and response times

- Latency Trends - Performance over time

- Dependency Status - Component-level health history

Export Health Metrics for Audit:

```bash
# Export January 2024 health check results (adjust the interval for other periods)
gcloud monitoring time-series list \
  --project=anava-ai \
  --filter='metric.type="monitoring.googleapis.com/uptime_check/check_passed"' \
  --interval='start="2024-01-01T00:00:00Z",end="2024-01-31T23:59:59Z"' \
  --format=json > health-audit-report.json
```
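To turn the export into an availability figure per check, a sketch like the one below could summarise it. It assumes health-audit-report.json holds a JSON array of Cloud Monitoring TimeSeries objects. Each object is assumed to carry a check_id metric label and points whose value.boolValue records whether a run passed. Verify this shape against your actual export before relying on it. uptime_by_check is a hypothetical helper, and it pools results from all checker locations for each check.

```python
from __future__ import annotations

import json


def uptime_by_check(export_json: str) -> dict[str, float]:
    """Percentage of passed uptime-check points per check_id (assumed format)."""
    passed: dict[str, int] = {}
    total: dict[str, int] = {}
    for series in json.loads(export_json):
        labels = series.get("metric", {}).get("labels", {})
        check_id = labels.get("check_id", "unknown")
        for point in series.get("points", []):
            total[check_id] = total.get(check_id, 0) + 1
            if point.get("value", {}).get("boolValue", False):
                passed[check_id] = passed.get(check_id, 0) + 1
    return {cid: 100.0 * passed.get(cid, 0) / n for cid, n in total.items()}
```

For example, `uptime_by_check(open("health-audit-report.json").read())` would return a mapping from each uptime check ID to its pass percentage for the exported interval.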

Troubleshooting

Health Check Failing

- Check service logs:

  ```bash
  gcloud functions logs read --project=anava-ai --limit=50
  ```

- Verify network connectivity:

  ```bash
  curl -v https://api.anava.ai/health
  ```

- Check for deployment issues:

  ```bash
  firebase functions:list --project=anava-ai
  ```

Readiness Check Failing

- Identify the failed dependency:

  ```bash
  curl -s https://api.anava.ai/ready | jq '.checks | to_entries[] | select(.value.status != "healthy")'
  ```

- Check individual service status:
  - Database: Check the Firestore console
  - MQTT: Verify the broker VM status
  - Storage: Check bucket permissions
  - Auth: Verify the Firebase Auth service

- Review dependency latency:

  ```bash
  curl -s https://api.anava.ai/ready | jq '.checks | to_entries[] | {name: .key, latency: .value.latency_ms}'
  ```

High Latency

- Check regional performance:
  - Run checks from multiple regions
  - Compare latency across locations
- Review dependency performance:
  - Check individual component latency
  - Identify bottlenecks
- Scale resources if needed:
  - Increase function memory/instances
  - Review database indexes

Related Topics

- Backup & Recovery - Disaster recovery procedures

- Security Configuration - Security monitoring integration

- Upgrade Guide - Health checks during upgrades