Device Alerts

Anava's Device Alert System provides structured error reporting and health monitoring across your entire camera fleet. Every device continuously reports its status, enabling proactive maintenance and rapid issue resolution.

Key Capabilities

| Feature | Description |
|---|---|
| Real-time Alerts | Immediate notification of device issues |
| Severity Classification | CRITICAL, ERROR, WARNING, INFO levels |
| Fleet Dashboard | Aggregate view of all device health |
| Alert History | Full audit trail of device events |
| Auto-resolution Tracking | Know when issues self-heal |

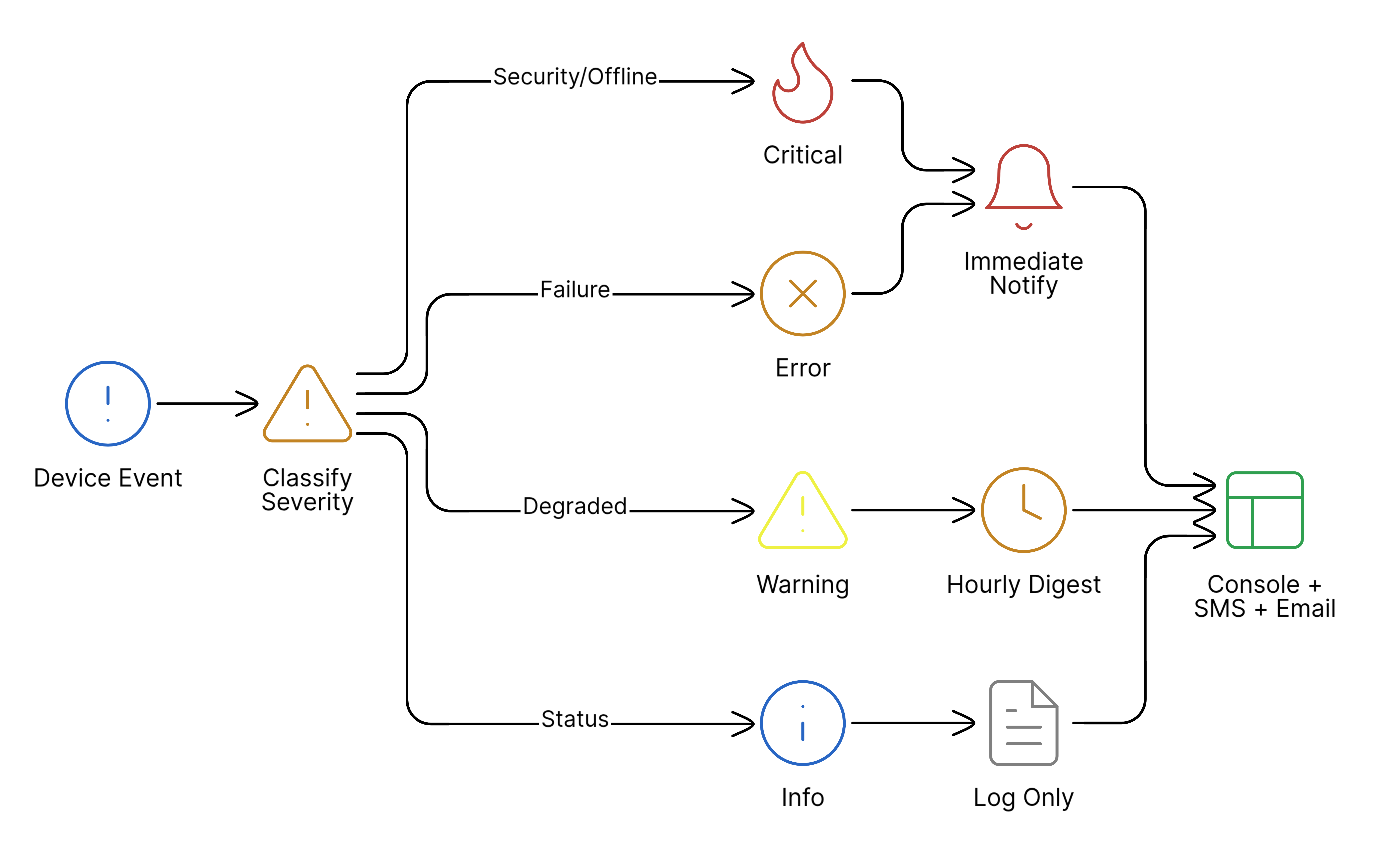

Alert Severity Levels

Severity Definitions

| Level | Response Time | Examples |
|---|---|---|
| CRITICAL | Immediate | Device offline, MQTT hijack attempt, certificate expired |
| ERROR | Within 1 hour | Stream failure, persistent auth failure, disk full |
| WARNING | Within 24 hours | High memory usage, repeated retries, config drift |
| INFO | No action needed | Successful restart, config applied, version update |
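Scripts that consume alerts can encode the table above as data. The following is a minimal sketch. It assumes alerts are dicts shaped like the payload shown under Alert Payload Structure. `RESPONSE_TARGET`, `SEVERITY_RANK`, and `triage_order` are hypothetical names and are not part of any Anava SDK.

```python
from datetime import timedelta
from typing import Optional

# Response-time targets from the Severity Definitions table.
# "Immediate" is modeled as zero; INFO needs no action, so it has no target.
RESPONSE_TARGET: dict[str, Optional[timedelta]] = {
    "CRITICAL": timedelta(0),
    "ERROR": timedelta(hours=1),
    "WARNING": timedelta(hours=24),
    "INFO": None,
}

# Lower rank = more urgent (hypothetical helper for sorting mixed alert lists).
SEVERITY_RANK = {"CRITICAL": 0, "ERROR": 1, "WARNING": 2, "INFO": 3}


def triage_order(alerts: list[dict]) -> list[dict]:
    """Sort alerts most-urgent first, oldest first within a severity level.

    ISO-8601 UTC strings in the same format sort chronologically as text.
    """
    return sorted(alerts, key=lambda a: (SEVERITY_RANK[a["severity"]], a["timestamp"]))
```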

Alert Categories

Connectivity Alerts

| Code | Severity | Description |
|---|---|---|
| CONN_001 | CRITICAL | MQTT connection lost (> 5 minutes) |
| CONN_002 | ERROR | MQTT connection unstable (> 3 reconnects/hour) |
| CONN_003 | WARNING | Network latency high (> 500ms) |
| CONN_004 | INFO | Connection restored |
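The connectivity thresholds can be expressed as a small classifier. This is only a sketch. The snapshot fields are hypothetical inputs, because this page does not describe how the device measures disconnect time, reconnect rate, or latency. The CONN_004 rule, which treats CONN_004 as an earlier CONN_001 outage that has cleared, is also an assumption.

```python
from dataclasses import dataclass


@dataclass
class ConnectionSnapshot:
    # Hypothetical inputs; the device-side metrics are not documented here.
    seconds_disconnected: float  # 0 while the MQTT session is up
    reconnects_last_hour: int
    latency_ms: float


def connectivity_codes(s: ConnectionSnapshot) -> list[str]:
    """Map a snapshot to CONN_* codes using the thresholds in the table."""
    codes = []
    if s.seconds_disconnected > 5 * 60:  # CONN_001: lost for more than 5 minutes
        codes.append("CONN_001")
    if s.reconnects_last_hour > 3:       # CONN_002: more than 3 reconnects/hour
        codes.append("CONN_002")
    if s.latency_ms > 500:               # CONN_003: latency above 500 ms
        codes.append("CONN_003")
    return codes


def restored(prev_codes: list[str], now_codes: list[str]) -> bool:
    """Assumed CONN_004 condition: a CONN_001 outage has cleared."""
    return "CONN_001" in prev_codes and "CONN_001" not in now_codes
```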

Security Alerts

| Code | Severity | Description |
|---|---|---|
| SEC_001 | CRITICAL | Certificate validation failed |
| SEC_002 | CRITICAL | Unauthorized broker connection attempt |
| SEC_003 | ERROR | Certificate expiring (< 7 days) |
| SEC_004 | WARNING | Multiple failed auth attempts |
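A certificate-expiry check that matches SEC_003 might look like the sketch below. Mapping an already-expired certificate to SEC_001 is an assumption. It rests on the Severity Definitions table, which lists "certificate expired" as a CRITICAL example. `cert_expiry_code` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

WARNING_WINDOW = timedelta(days=7)  # SEC_003: certificate expiring in < 7 days


def cert_expiry_code(not_after: datetime, now: Optional[datetime] = None) -> Optional[str]:
    """Return an alert code for a certificate's notAfter time, or None if healthy.

    Both datetimes must be timezone-aware. Assumption: an already-expired
    certificate is reported as SEC_001, since it can no longer validate.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    remaining = not_after - now
    if remaining <= timedelta(0):
        return "SEC_001"
    if remaining < WARNING_WINDOW:
        return "SEC_003"
    return None
```

On a host with OpenSSL, `openssl x509 -checkend 604800 -noout -in cert.pem` performs a comparable 7-day check (604800 seconds = 7 days). It exits non-zero if the certificate expires within that window.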

Configuration Alerts

| Code | Severity | Description |
|---|---|---|
| CFG_001 | CRITICAL | Critical configuration drift detected |
| CFG_002 | WARNING | Configuration healed automatically |
| CFG_003 | WARNING | Configuration conflict (repeated drift) |
| CFG_004 | INFO | Configuration updated successfully |
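Drift detection comes down to comparing desired settings against actual settings. The sketch below is illustrative only. Two values are assumptions: the set of critical keys, and `conflict_threshold` of 3 heals, since this page does not say how many repeats count as "repeated drift". `config_drift` and `classify_drift` are hypothetical helpers, not ConfigGuardian internals.

```python
from typing import Optional


def config_drift(desired: dict, actual: dict) -> dict:
    """Return {key: (desired_value, actual_value)} for every setting that differs."""
    keys = sorted(desired.keys() | actual.keys())
    return {k: (desired.get(k), actual.get(k)) for k in keys if desired.get(k) != actual.get(k)}


def classify_drift(
    drift: dict,
    critical_keys: set,
    heals_last_24h: int,
    conflict_threshold: int = 3,  # assumed value for "repeated drift"
) -> Optional[str]:
    """Pick a CFG_* code for detected drift, or None if nothing drifted."""
    if not drift:
        return None
    if any(k in critical_keys for k in drift):
        return "CFG_001"  # drift touches a setting marked critical
    if heals_last_24h >= conflict_threshold:
        return "CFG_003"  # the same drift keeps coming back after healing
    return "CFG_002"      # assumed: non-critical drift is healed automatically
```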

Resource Alerts

| Code | Severity | Description |
|---|---|---|
| RES_001 | ERROR | Memory usage critical (> 90%) |
| RES_002 | WARNING | Memory usage high (> 80%) |
| RES_003 | WARNING | Storage space low (< 100MB) |
| RES_004 | INFO | Resource usage normalized |
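The resource thresholds reduce to simple comparisons. This minimal sketch assumes memory is reported as used and total bytes. It also reads "100MB" as 100 MiB. Both helpers are hypothetical.

```python
from typing import Optional

MIB = 1024 * 1024  # assumption: "100MB" is read as 100 MiB


def memory_code(used_bytes: int, total_bytes: int) -> Optional[str]:
    """RES_001 above 90% memory use, RES_002 above 80%, else None."""
    percent = 100 * used_bytes / total_bytes
    if percent > 90:
        return "RES_001"
    if percent > 80:
        return "RES_002"
    return None


def storage_code(free_bytes: int) -> Optional[str]:
    """RES_003 when free storage drops below 100 MiB."""
    return "RES_003" if free_bytes < 100 * MIB else None
```

Under this reading, RES_004 would correspond to a helper's result changing from one of these codes back to `None`.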

Alert Payload Structure

Every alert follows a consistent JSON structure:

```json
{
  "alertId": "a1b2c3d4-e5f6-7890-abcd-ef1234567890",
  "deviceId": "ACCC8EF12345",
  "groupId": "warehouse-east",
  "code": "CONN_001",
  "severity": "CRITICAL",
  "category": "connectivity",
  "message": "MQTT connection lost for 5 minutes",
  "timestamp": "2025-12-19T10:30:00Z",
  "context": {
    "lastConnected": "2025-12-19T10:25:00Z",
    "brokerHost": "mqtt.anava.ai",
    "reconnectAttempts": 12
  },
  "resolved": false,
  "resolvedAt": null
}
```
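A consumer might load this payload into a typed object. The sketch below uses only the Python standard library. The `Alert` class and the helper functions are illustrative. Dropping unknown fields is a design choice made here for forward compatibility; it is not documented API behavior.

```python
import json
from dataclasses import dataclass, field, fields
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Alert:
    alertId: str
    deviceId: str
    groupId: str
    code: str
    severity: str
    category: str
    message: str
    timestamp: datetime
    context: dict = field(default_factory=dict)
    resolved: bool = False
    resolvedAt: Optional[datetime] = None


def parse_ts(value: Optional[str]) -> Optional[datetime]:
    """Parse an ISO-8601 UTC timestamp such as "2025-12-19T10:30:00Z"."""
    if value is None:
        return None
    # datetime.fromisoformat() accepts a trailing "Z" only from Python 3.11 on.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def parse_alert(raw: str) -> Alert:
    data = json.loads(raw)
    data["timestamp"] = parse_ts(data["timestamp"])
    data["resolvedAt"] = parse_ts(data.get("resolvedAt"))
    known = {f.name for f in fields(Alert)}
    # Drop fields this sketch does not model; a missing required field raises TypeError.
    return Alert(**{k: v for k, v in data.items() if k in known})


def outage_duration(alert: Alert) -> timedelta:
    """For connectivity alerts: time between the last connection and the alert."""
    return alert.timestamp - parse_ts(alert.context["lastConnected"])
```

With the example payload above, `outage_duration` returns 5 minutes (10:25 to 10:30 UTC).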

Fleet Dashboard

The Anava Console provides a fleet-wide view of device health:

```text
┌─────────────────────────────────────────────────────────────┐
│  Fleet Health Overview                                      │
├─────────────────────────────────────────────────────────────┤
│                                                             │
│  Total Devices: 247          Online: 243 (98.4%)            │
│                                                             │
│  ┌──────────┐ ┌──────────┐ ┌──────────┐ ┌──────────┐        │
│  │ CRITICAL │ │  ERROR   │ │ WARNING  │ │   INFO   │        │
│  │    2     │ │    5     │ │    12    │ │    34    │        │
│  │    🔴    │ │    🟠    │ │    🟡    │ │    🔵    │        │
│  └──────────┘ └──────────┘ └──────────┘ └──────────┘        │
│                                                             │
│  Recent Alerts:                                             │
│  • 10:30  CRITICAL  CONN_001 - Lobby Camera 1 offline       │
│  • 10:28  ERROR     SEC_003  - Parking Cam cert expiring    │
│  • 10:15  WARNING   CFG_002  - Dock 3 config healed         │
│                                                             │
└─────────────────────────────────────────────────────────────┘
```
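The headline figures are simple aggregates. This sketch assumes you already have per-device online status and a list of active alerts; this page does not describe how the Console computes them. `fleet_summary` is a hypothetical helper. With 243 of 247 devices online, it reproduces the 98.4% shown above.

```python
from collections import Counter

LEVELS = ("CRITICAL", "ERROR", "WARNING", "INFO")


def fleet_summary(online_by_device: dict, active_alerts: list) -> dict:
    """Aggregate device status and alert counts like the Fleet Health Overview."""
    total = len(online_by_device)
    online = sum(1 for up in online_by_device.values() if up)
    counts = Counter(a["severity"] for a in active_alerts)
    return {
        "total": total,
        "online": online,
        "online_pct": round(100 * online / total, 1) if total else 0.0,
        "by_severity": {level: counts[level] for level in LEVELS},
    }
```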

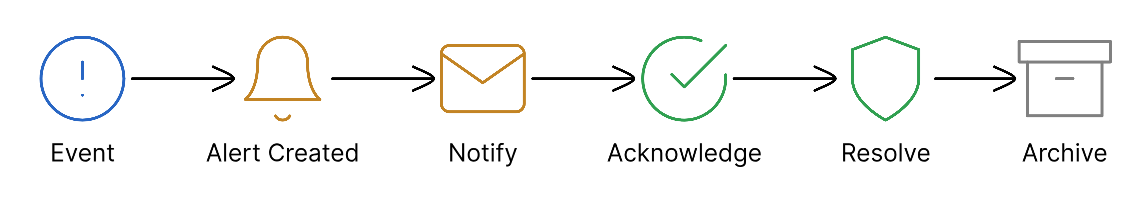

Alert Lifecycle

Alert States

| State | Description | Actions Available |
|---|---|---|
| Active | New alert, requires attention | Acknowledge, Resolve, Snooze |
| Acknowledged | User aware, working on it | Resolve, Add Note |
| Escalated | SLA breached, needs attention | Acknowledge, Resolve |
| Resolved | Issue fixed | View History, Reopen |
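The lifecycle can be modeled as a small state machine. In this sketch, the allowed actions come from the table. Several details are assumptions not stated on this page. Snooze and Add Note leave the state unchanged. Escalation is a system transition applied to an Active alert that misses its response-time target. View History is read-only and is not modeled.

```python
# Allowed actions per state, from the Alert States table.
# "escalate" is an assumed system-triggered transition (SLA breached).
TRANSITIONS = {
    "Active": {
        "acknowledge": "Acknowledged",
        "resolve": "Resolved",
        "snooze": "Active",  # assumed: snoozing does not change the state
        "escalate": "Escalated",
    },
    "Acknowledged": {"resolve": "Resolved", "add_note": "Acknowledged"},
    "Escalated": {"acknowledge": "Acknowledged", "resolve": "Resolved"},
    "Resolved": {"reopen": "Active"},
}


def transition(state: str, action: str) -> str:
    """Return the next state, or raise ValueError if the action is not allowed."""
    try:
        return TRANSITIONS[state][action]
    except KeyError:
        raise ValueError(f"{action!r} is not available in state {state!r}") from None
```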

Notification Channels

Alerts can be delivered through multiple channels:

| Channel | CRITICAL | ERROR | WARNING | INFO |
|---|---|---|---|---|
| Console Dashboard | ✓ | ✓ | ✓ | ✓ |
| Email | ✓ | ✓ | Optional | - |
| Slack/Teams | ✓ | ✓ | Optional | - |
| SMS | ✓ | Optional | - | - |
| Webhook | ✓ | ✓ | ✓ | ✓ |
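The delivery matrix can drive a routing function. This is a sketch only. The channel keys are hypothetical: `"email"` and `"sms"` follow the `notify` values used in the Alert Rules example, and `"slack"` stands for Slack/Teams. Modeling "Optional" as a per-channel, per-severity opt-in is an assumption.

```python
from typing import AbstractSet

# Delivery matrix from the Notification Channels table.
# True = always delivered, "optional" = delivered only if opted in, False = never.
MATRIX = {
    "console": {"CRITICAL": True, "ERROR": True, "WARNING": True, "INFO": True},
    "email": {"CRITICAL": True, "ERROR": True, "WARNING": "optional", "INFO": False},
    "slack": {"CRITICAL": True, "ERROR": True, "WARNING": "optional", "INFO": False},
    "sms": {"CRITICAL": True, "ERROR": "optional", "WARNING": False, "INFO": False},
    "webhook": {"CRITICAL": True, "ERROR": True, "WARNING": True, "INFO": True},
}


def channels_for(severity: str, opted_in: AbstractSet[tuple] = frozenset()) -> list:
    """Channels that should receive an alert of the given severity.

    opted_in holds (channel, severity) pairs the user enabled for "optional" cells.
    """
    selected = []
    for channel, row in MATRIX.items():
        rule = row[severity]
        if rule is True or (rule == "optional" and (channel, severity) in opted_in):
            selected.append(channel)
    return selected
```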

Configure notification channels under Console → Settings → Notifications.

Alert Rules

Create custom alert rules to filter or escalate specific conditions:

```yaml
# Example: Escalate offline cameras in critical areas
rule:
  name: "Critical Area Offline"
  condition:
    code: "CONN_001"
    group: ["entrance", "server-room", "executive"]
  action:
    escalate_to: "security-team"
    notify: ["sms", "email"]
    priority: "P1"
```
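This page does not specify how the rule engine evaluates conditions. The sketch below follows one plausible reading. Every condition key must match the alert. A list value means "any of". The rule's `group` key is compared against the payload's `groupId` field. These semantics and the helper names are assumptions.

```python
# The YAML rule above, written as the equivalent Python dict to avoid a YAML dependency.
RULE = {
    "name": "Critical Area Offline",
    "condition": {"code": "CONN_001", "group": ["entrance", "server-room", "executive"]},
    "action": {"escalate_to": "security-team", "notify": ["sms", "email"], "priority": "P1"},
}

# Assumed mapping from rule condition keys to alert payload fields.
FIELD_MAP = {"group": "groupId"}


def matches(rule: dict, alert: dict) -> bool:
    """True if every condition in the rule matches the alert (assumed semantics)."""
    for key, expected in rule["condition"].items():
        actual = alert.get(FIELD_MAP.get(key, key))
        if isinstance(expected, list):
            if actual not in expected:  # list means "any of these values"
                return False
        elif actual != expected:
            return False
    return True
```

When `matches` returns `True`, a dispatcher would apply `rule["action"]`. For this rule, that means escalating to `security-team`, notifying via SMS and email, and setting priority P1.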

API Access

Query and acknowledge alerts programmatically:

```bash
# Get active alerts
curl -H "Authorization: Bearer $TOKEN" \
  "https://api.anava.ai/v1/alerts?status=active"

# Get alerts for a device
curl -H "Authorization: Bearer $TOKEN" \
  "https://api.anava.ai/v1/devices/ACCC8EF12345/alerts"

# Acknowledge an alert
curl -X POST -H "Authorization: Bearer $TOKEN" \
  "https://api.anava.ai/v1/alerts/a1b2c3d4/acknowledge"
```
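The same calls can be made from Python using only the standard library. This is a sketch. It builds the requests shown in the curl examples. This page does not document the response bodies, so decoding them as JSON in `send` is an assumption. The helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "https://api.anava.ai/v1"


def _auth(token: str) -> dict:
    return {"Authorization": f"Bearer {token}"}


def active_alerts_request(token: str) -> urllib.request.Request:
    query = urllib.parse.urlencode({"status": "active"})
    return urllib.request.Request(f"{BASE_URL}/alerts?{query}", headers=_auth(token))


def device_alerts_request(token: str, device_id: str) -> urllib.request.Request:
    path = urllib.parse.quote(device_id, safe="")
    return urllib.request.Request(f"{BASE_URL}/devices/{path}/alerts", headers=_auth(token))


def acknowledge_request(token: str, alert_id: str) -> urllib.request.Request:
    path = urllib.parse.quote(alert_id, safe="")
    return urllib.request.Request(
        f"{BASE_URL}/alerts/{path}/acknowledge", headers=_auth(token), method="POST"
    )


def send(req: urllib.request.Request):
    """Send a request and decode the body as JSON (assumed response format)."""
    with urllib.request.urlopen(req, timeout=10) as resp:
        body = resp.read()
    return json.loads(body) if body else None
```

For example, `send(active_alerts_request(os.environ["TOKEN"]))` mirrors the first curl command.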

Best Practices

- Set up escalation policies - Don't let critical alerts go unnoticed

- Use alert groups - Organize devices by location/function for targeted notifications

- Review weekly - Check for patterns in warnings before they become errors

- Configure quiet hours - Reduce notification fatigue for non-critical alerts

- Integrate with existing tools - Use webhooks to connect to your incident management system

Related Documentation

- Alert Codes Reference - Complete list of alert codes

- Troubleshooting - Common alert scenarios and fixes

- ConfigGuardian Alerts - Configuration-specific alerts

Last updated: December 2025