Confidence Tuning

This guide explains how to configure confidence thresholds to balance detection sensitivity with false positive reduction.

Understanding Confidence Scores

Every Anava detection includes a confidence score indicating how certain the AI is about its analysis.

Score Ranges

| Range | Label | Meaning |
|---|---|---|
| 0-40% | Very Low | Uncertain, likely incorrect |
| 40-60% | Low | Some indicators, needs verification |
| 60-75% | Medium | Reasonable confidence |
| 75-90% | High | Likely correct |
| 90-100% | Very High | Almost certain |
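The table can be read as a simple lookup. The sketch below is illustrative only: `confidence_label` and `BANDS` are hypothetical names, not part of any Anava API, and because the ranges in the table share their boundary values, it assumes a boundary score (for example, exactly 40%) belongs to the higher band.

```python
# Hypothetical helper; the bands are copied from the Score Ranges table.
# Assumption: each band includes its lower bound, so a score that sits on a
# shared boundary (40, 60, 75, 90) is given the higher label.
BANDS = [
    (90, "Very High"),
    (75, "High"),
    (60, "Medium"),
    (40, "Low"),
    (0, "Very Low"),
]


def confidence_label(score_percent: float) -> str:
    """Return the table label for a confidence score given in percent."""
    if not 0 <= score_percent <= 100:
        raise ValueError("score must be between 0 and 100")
    # Bands are ordered from highest to lowest, so the first match wins.
    for lower_bound, label in BANDS:
        if score_percent >= lower_bound:
            return label
    raise AssertionError("unreachable: the last band starts at 0")


print(confidence_label(82))  # High
print(confidence_label(40))  # Low
```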

Where Confidence Appears

- Object detections - Each object has a confidence score

- Question answers - Boolean/set answers may include confidence

- Overall session - Aggregate confidence for the analysis

Threshold Configuration

Profile-Level Threshold

Set in profile settings:

| Setting | Description | Default |
|---|---|---|
| Confirmation Threshold | Consecutive detections before TTS fires (1-10) | 1 |

When set to N:

- Requires N consecutive positive detections within a session before TTS triggers

- ONVIF events still emit immediately (not gated)

- Counter resets after TTS fires

- Counter is per-session (cleared when session ends)

Set it to 2-3 to require multiple confirmations before a voice response. This is especially useful in high-traffic areas, where momentary detections may not warrant immediate TTS.
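The gating behavior described above can be modeled with a small counter. This is a minimal sketch, not the product's implementation: `SessionGate` and `observe` are hypothetical names, and it assumes that a negative result breaks the consecutive streak, which the text implies but does not state outright.

```python
from dataclasses import dataclass


@dataclass
class SessionGate:
    """Hypothetical per-session model of the Confirmation Threshold."""

    confirmation_threshold: int = 1  # documented range: 1-10, default 1
    consecutive: int = 0

    def __post_init__(self) -> None:
        if not 1 <= self.confirmation_threshold <= 10:
            raise ValueError("confirmation_threshold must be between 1 and 10")

    def observe(self, detected: bool) -> dict:
        """Process one analysis result and report which outputs fire."""
        if not detected:
            # Assumption: a negative result resets the consecutive streak.
            self.consecutive = 0
            return {"onvif": False, "tts": False}
        self.consecutive += 1
        fire_tts = self.consecutive >= self.confirmation_threshold
        if fire_tts:
            self.consecutive = 0  # the counter resets after TTS fires
        # ONVIF is not gated: every positive detection emits an event.
        return {"onvif": True, "tts": fire_tts}


# One gate per session; a new session starts with a fresh counter.
gate = SessionGate(confirmation_threshold=2)
for detected in [True, False, True, True]:
    print(gate.observe(detected))
```

With a threshold of 2, the sequence above fires TTS only on the fourth result, while an ONVIF event is emitted for every positive result.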

Object-Level Behavior

In skill configuration, each object can have:

- Rapid Eligible - Can trigger immediate response at high confidence

- Trigger Deep Analysis - Low confidence triggers additional analysis

Setting the Right Threshold

By Use Case

| Use Case | Recommended Threshold | Rationale |
|---|---|---|
| Weapon detection | 75-85% | Critical, and missed detections are unacceptable |
| Intrusion detection | 80-90% | Balance speed and accuracy |
| PPE compliance | 70-80% | Some tolerance acceptable |
| Queue monitoring | 60-70% | Directional data is acceptable |
| Analytics only | 50%+ | Capture all data |

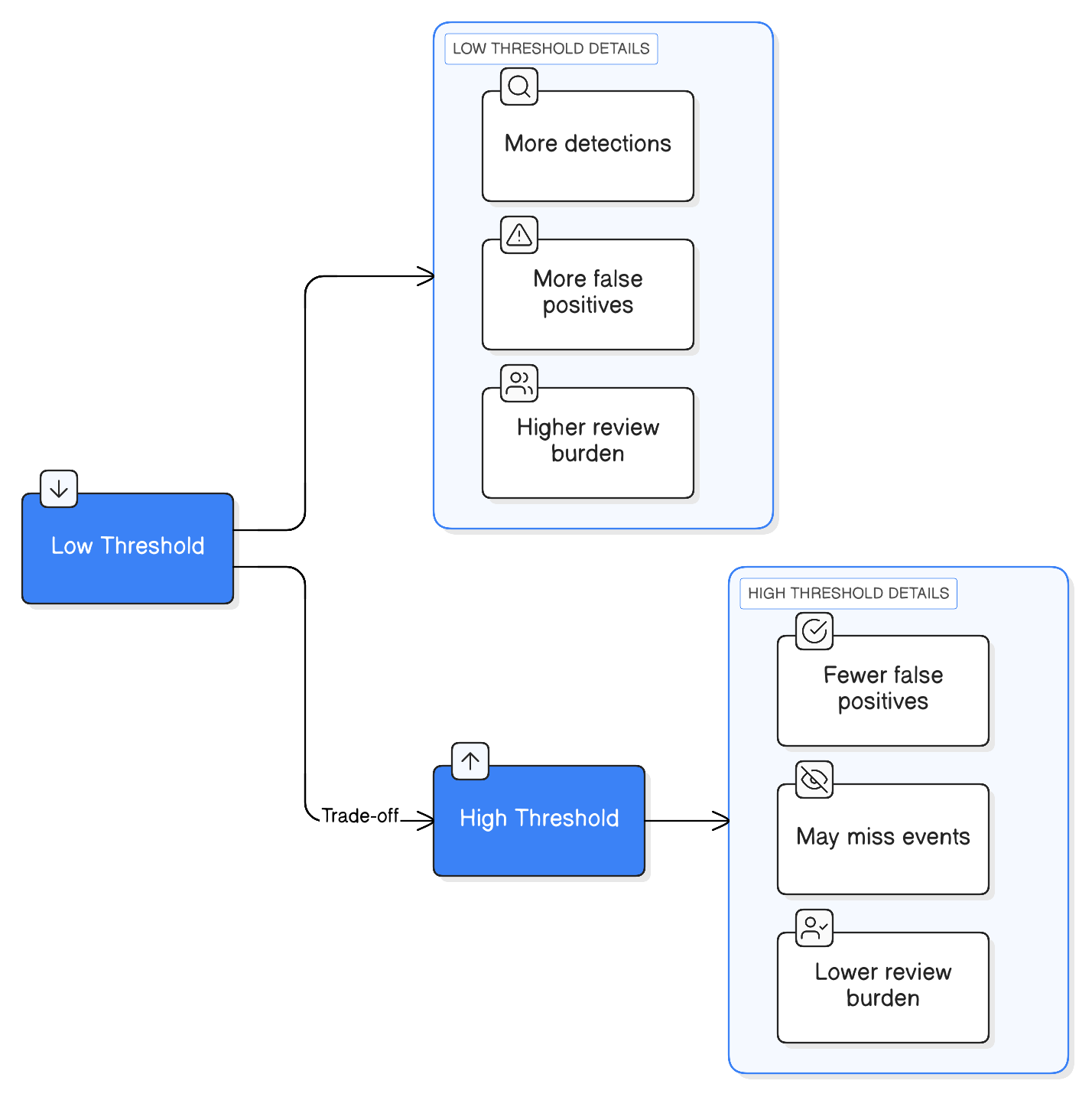

The Sensitivity Trade-off

Tuning Process

Step 1: Establish Baseline

- Set threshold to 0 (no filtering)

- Run for 24-48 hours

- Review all sessions

- Calculate baseline metrics

Step 2: Analyze Distribution

Review session confidence scores:

Sessions by Confidence:

├── 90-100%: 45 sessions (all correct)

├── 80-90%: 32 sessions (30 correct, 2 false positive)

├── 70-80%: 28 sessions (20 correct, 8 false positive)

├── 60-70%: 15 sessions (5 correct, 10 false positive)

└── <60%: 8 sessions (1 correct, 7 false positive)
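To make the distribution easier to compare, the share of correct sessions can be computed per band. The sketch below uses only the example counts shown above; `bucket_precision` is a hypothetical helper name.

```python
# Example counts from the distribution above: (correct, false positives).
buckets = {
    "90-100%": (45, 0),
    "80-90%": (30, 2),
    "70-80%": (20, 8),
    "60-70%": (5, 10),
    "<60%": (1, 7),
}


def bucket_precision(correct: int, false_positives: int) -> float:
    """Fraction of sessions in a band that were correct."""
    total = correct + false_positives
    return correct / total if total else 0.0


for label, (correct, false_positives) in buckets.items():
    share = bucket_precision(correct, false_positives)
    print(f"{label:>8}: {share:.1%} correct")
```

In this example, accuracy falls from 100% in the top band to about 71% in the 70-80% band and to one third in the 60-70% band, which suggests the useful cut-off lies somewhere around 70-80%.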

Step 3: Set Initial Threshold

Based on analysis:

- If at least 80% of sessions at or above 75% confidence are accurate, set the threshold to 75%

- Raise or lower it according to your tolerance for false positives
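One way to turn the Step 2 distribution into an initial threshold is to accumulate sessions from the highest band downward and keep the lowest boundary at which the share of correct sessions still meets your target. This is a sketch under stated assumptions: `lowest_threshold_meeting` is a hypothetical helper, the counts are the example figures from Step 2, and because those figures are grouped in 10-point bands, only band boundaries (not 75%) can be evaluated.

```python
# (lower bound of band in percent, correct, false positives), highest first.
# Counts are the example figures from Step 2.
BANDS = [(90, 45, 0), (80, 30, 2), (70, 20, 8), (60, 5, 10), (0, 1, 7)]


def lowest_threshold_meeting(target_precision: float):
    """Return the lowest band boundary whose cumulative precision meets the
    target, or None if even the top band falls short."""
    correct_total = 0
    alerts_total = 0
    chosen = None
    for lower_bound, correct, false_positives in BANDS:
        correct_total += correct
        alerts_total += correct + false_positives
        if alerts_total and correct_total / alerts_total >= target_precision:
            chosen = lower_bound
        else:
            # Conservative choice: stop at the first band that misses the target.
            break
    return chosen


print(lowest_threshold_meeting(0.90))  # 70
print(lowest_threshold_meeting(0.80))  # 60
```

At a threshold of 70%, 95 of the 105 alerting sessions in the example are correct (about 90%); dropping to 60% admits 15 more sessions, 10 of which are false positives.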

Step 4: Monitor and Adjust

After setting threshold:

- Monitor for missed detections

- Review sessions that were flagged but did not trigger an alert

- Adjust threshold if needed

Advanced Configurations

Multi-Threshold Strategy

Use different thresholds for different responses:

| Confidence | Response |
|---|---|
| 90%+ | Immediate alarm + recording |
| 75-90% | Recording + email alert |
| 50-75% | Recording only |
| Under 50% | Log for review |

Implementation:

- Create multiple profiles with different thresholds

- Each profile triggers different VMS actions
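The tiered mapping can be sketched as a single function. In a real deployment the tiers are realized through separate profiles and VMS action rules, as noted above; `response_for` and the action names below are hypothetical and only model the table. The sketch assumes a boundary score (exactly 75% or 90%) falls into the higher tier.

```python
def response_for(confidence_percent: float) -> list[str]:
    """Map a confidence score (percent) to the response tier in the table."""
    if confidence_percent >= 90:
        return ["alarm", "recording"]
    if confidence_percent >= 75:
        return ["recording", "email"]
    if confidence_percent >= 50:
        return ["recording"]
    return ["log"]


print(response_for(93))  # ['alarm', 'recording']
print(response_for(62))  # ['recording']
```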

Rapid Analysis for Critical Detections

For high-priority objects (weapons):

- Enable Rapid Eligible on the object

- High-confidence detections skip full analysis

- Immediate response for clear threats

- Full analysis continues for context

TTS Threshold

The TTS threshold is configured separately from the ONVIF threshold:

| Setting | Purpose |
|---|---|
| Profile Threshold | When to emit ONVIF events |
| TTS Confidence | When to trigger voice response |

Set TTS higher than ONVIF to reduce unnecessary announcements.
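The effect of keeping the two thresholds apart can be sketched as two independent checks. `outputs_for` is a hypothetical function, the values 75 and 90 are illustrative rather than documented defaults, and the sketch assumes each output is evaluated on its own against its threshold.

```python
def outputs_for(confidence_percent: float,
                profile_threshold: float,
                tts_confidence: float) -> dict:
    """Report which outputs fire for one detection.

    Assumption: ONVIF and TTS are gated independently by their own thresholds.
    """
    return {
        "onvif": confidence_percent >= profile_threshold,
        "tts": confidence_percent >= tts_confidence,
    }


# Illustrative values only: ONVIF at 75%, TTS at 90%.
print(outputs_for(82, profile_threshold=75, tts_confidence=90))
# {'onvif': True, 'tts': False}
```

A detection at 82% reaches the VMS as an ONVIF event but does not trigger an announcement.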

Troubleshooting Threshold Issues

Too Many False Positives

Symptoms:

- VMS flooded with events

- Action rules triggering on non-events

- Operator alert fatigue

Solutions:

- Increase threshold by 5-10%

- Review false positives for patterns

- Improve skill prompts

- Consider adding a pre-filter

Missing Real Events

Symptoms:

- Known events not triggering

- Sessions show a detection but no ONVIF event

- VMS not receiving alerts

Solutions:

- Lower threshold by 5-10%

- Review missed events' confidence scores

- Improve prompts for clarity

- Consider a separate high-sensitivity profile

Inconsistent Confidence

Symptoms:

- Same scenario gives wildly different scores

- Threshold that worked stops working

Solutions:

- Improve prompt specificity

- Add contextual information

- Check for environmental changes (for example, lighting)

- Review recent false positives

Confidence by Object Type

Different objects may need different handling:

High-Confidence Objects

Objects where the AI is typically very certain:

- Person (clearly visible human)

- Vehicle (distinct shape)

- Fire/Smoke (obvious visual)

Recommendation: Threshold 80%+

Lower-Confidence Objects

Objects that are harder to classify:

- Weapon (context-dependent)

- PPE items (small, similar items)

- Behaviors (subjective)

Recommendation: Threshold 70-80% with verification

Seasonal and Environmental Adjustments

Lighting Changes

| Condition | Adjustment |
|---|---|
| Winter (low light) | Lower threshold 5-10% |
| Summer (bright) | Higher threshold possible |
| Indoor (consistent) | Standard threshold |

Weather Impact

| Condition | Effect | Adjustment |
|---|---|---|
| Rain | Reduced visibility | Lower threshold |
| Fog | Obscured details | Lower threshold + review |
| Snow | Reflection issues | Adjust prompts |

Best Practices

Start Conservative

Begin with a higher threshold and adjust downward:

- Lowering a threshold is easier than raising one

- Avoids initial flood of false positives

- Builds operator trust in system

Document Changes

Keep a record of:

- Threshold changes and dates

- Reason for change

- Observed impact

- The previous value, so you can revert if needed
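A change log can be as simple as a list of records. The sketch below is one possible shape, not a feature of the product: `ThresholdChange` and `revert_value` are hypothetical names, and the sample entry is invented purely for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ThresholdChange:
    """One entry in a hypothetical threshold change log."""

    changed_on: date
    previous_percent: int
    new_percent: int
    reason: str
    observed_impact: str = ""  # filled in after a monitoring period


def revert_value(history: list) -> int:
    """Return the threshold to restore if the latest change is rolled back."""
    if not history:
        raise ValueError("no changes recorded")
    return history[-1].previous_percent


# Illustrative entry only.
history = [
    ThresholdChange(
        changed_on=date(2024, 1, 15),
        previous_percent=80,
        new_percent=75,
        reason="Known events were not triggering",
    )
]
print(revert_value(history))  # 80
```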

Regular Review

Schedule a threshold review:

- Monthly for active deployments

- After major changes

- Following complaints or issues

Related Topics

- Tuning & Optimization - Overall tuning strategies

- Learning Mode - Active monitoring configuration

- Skills Reference - Object configuration